In a multiprocessing system built from individual processor boards and a serial fabric, the boards are connected by some kind of InterProcessor Communications (IPC) mechanism. The specific serial fabric used for IPC determines the system's latency and determinism characteristics. Here are my definitions of the basic multiprocessing architectures:

- Tightly Coupled/Shared-Everything (TCSE) – Every processor has direct access to every resource in the system. It operates with an intimate protocol that allows peer-to-peer connections without much overhead. Among serial fabrics, RapidIO is the only one available that can implement a TCSE multiprocessing architecture as an IPC mechanism; among buses, VME is the only one that can. TCSE architectures are highly deterministic and well suited to real-time work.

- Snugly Coupled/Shared-Something (SCSS) – All of the processors must share a mechanism to exchange messages with each other and stay synchronized. Typically, that is a section of memory, though it could also be a segment of a disk. This shared memory can also be made cache coherent so that two or more processors using the same data do not retrieve outdated (stale) data. Cache coherency guarantees that a CPU accessing the data gets the latest copy, which removes the need for software flags (semaphores) that exist only to warn a CPU that its copy might be stale; updates that take several steps still need some form of synchronization. SCSS architectures use a friendly protocol, not an intimate protocol: the processors cannot access every resource in the system directly, which adds back some overhead and latency. InfiniBand and Ethernet with Remote Direct Memory Access (RDMA) are both examples of fabrics that implement an SCSS multiprocessing architecture.

- Loosely Coupled/Shared-Nothing (LCSN) – Regular Ethernet with Carrier-Sense Multiple Access with Collision Detection (CSMA/CD) is an example of an LCSN architecture. It operates with a stranger protocol: every message received is treated as if it came from a stranger, hence the large packet headers on Ethernet messages. This massive protocol overhead adds huge latencies to a multiprocessing architecture. LCSNs are as far as you can get from deterministic or real-time behavior in a computer.
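
To put a rough number on the stranger-protocol overhead in the LCSN case, here is a minimal Python sketch. It assumes a UDP/IPv4 message carried in a single standard Ethernet frame (an assumption made for illustration; no particular protocol stack is specified above) and counts only bytes on the wire, not the time the software stack spends processing them. The function names `wire_bytes` and `efficiency` are hypothetical.

```python
# Standard sizes, in bytes, for Ethernet II framing and minimal IPv4/UDP headers.
ETH_PREAMBLE_SFD = 8      # preamble plus start-of-frame delimiter
ETH_HEADER = 14           # destination MAC, source MAC, EtherType
ETH_FCS = 4               # frame check sequence
ETH_INTERFRAME_GAP = 12   # mandatory idle time between frames
ETH_MIN_PAYLOAD = 46      # shorter payloads are padded up to this size
ETH_MAX_PAYLOAD = 1500    # standard (non-jumbo) MTU
IPV4_HEADER = 20          # without options
UDP_HEADER = 8


def wire_bytes(message_len: int) -> int:
    """Byte times one message occupies on the wire (single frame only)."""
    packet = IPV4_HEADER + UDP_HEADER + message_len
    if message_len < 0 or packet > ETH_MAX_PAYLOAD:
        raise ValueError("message must fit in one standard Ethernet frame")
    payload = max(packet, ETH_MIN_PAYLOAD)  # pad short packets
    return (ETH_PREAMBLE_SFD + ETH_HEADER + payload
            + ETH_FCS + ETH_INTERFRAME_GAP)


def efficiency(message_len: int) -> float:
    """Fraction of the wire time that carries application data."""
    return message_len / wire_bytes(message_len)


if __name__ == "__main__":
    for size in (8, 64, 1472):
        print(f"{size:5d}-byte message: {wire_bytes(size):5d} bytes on the wire, "
              f"{efficiency(size):.1%} payload")
```

For an 8-byte message, the kind of small status or synchronization word processors often exchange, the frame occupies 84 byte times on the wire, so less than 10 percent of the traffic is payload.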

As I see it, multicore processing comes in basically two flavors:

- Homogeneous multicore – The cores in the multicore chips now coming to market all share the same instruction set and data structures. Depending on how they are coupled (that is, how the IPC works), they have all the same problems the multiprocessing architectures have. The present multicore chips use memory (for message passing) as the IPC mechanism, so they are all SCSS multiprocessing architectures.

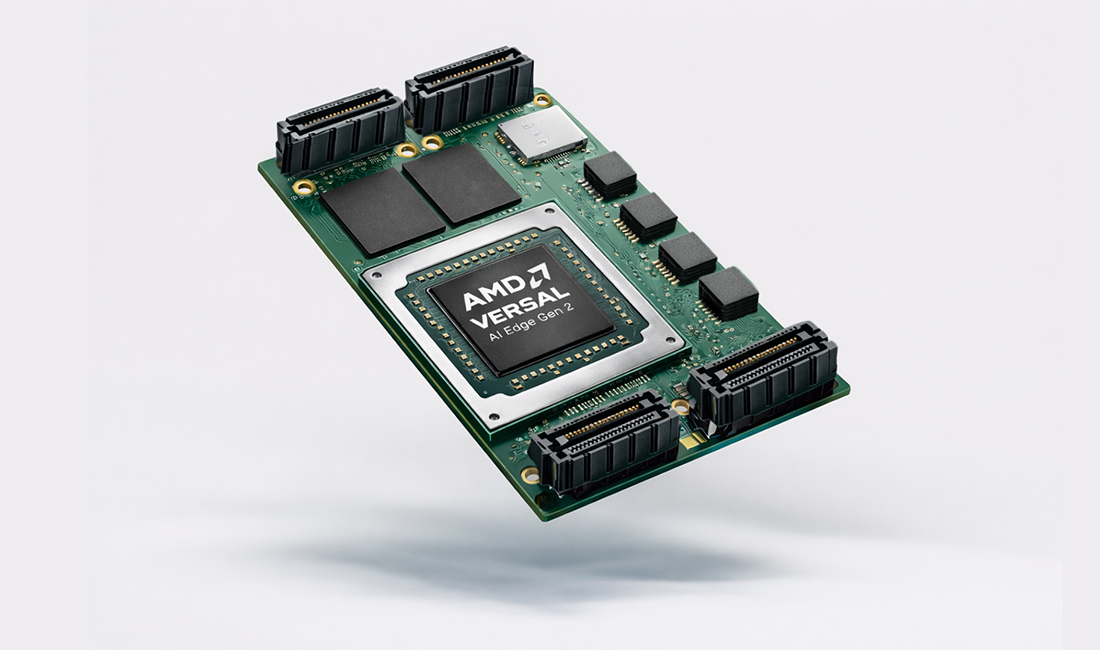

- Heterogeneous multicore – Each processor in a heterogeneous multicore architecture is different, with its own instruction set and data structures. Such a chip might have a general-purpose CPU core for applications, a DSP core for math, a graphics core for graphics work, an I/O processor core to handle specific I/O interfaces, and a communications core to handle Ethernet or TCP/IP protocols. Depending on how the IPC mechanism is implemented, it inherits all the same problems as the multiprocessing architectures. (See Table 1 for a description of the architectures.)
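
The memory-based IPC described for the homogeneous chips can be sketched as a one-slot mailbox in shared memory, guarded by a flag of the kind mentioned under SCSS. This is only an illustration in Python: both ends run in one process and attach to the same block by name, the layout (`HEADER`, `MAILBOX_SIZE`) and the functions `post`, `take`, and `demo` are hypothetical, and a real multicore implementation would also need whatever memory barriers or atomic operations the hardware requires.

```python
import struct
from multiprocessing import shared_memory

# Hypothetical mailbox layout: 1-byte flag (0 = empty, 1 = full),
# 4-byte payload length, then the payload itself.
HEADER = struct.Struct("<BI")
MAILBOX_SIZE = 256


def post(buf, payload: bytes) -> bool:
    """Write a message if the slot is empty; return False if it is still full."""
    flag, _ = HEADER.unpack_from(buf, 0)
    if flag == 1:
        return False  # previous message has not been consumed yet
    if HEADER.size + len(payload) > MAILBOX_SIZE:
        raise ValueError("payload too large for mailbox")
    buf[HEADER.size:HEADER.size + len(payload)] = payload
    HEADER.pack_into(buf, 0, 1, len(payload))  # set the flag last
    return True


def take(buf):
    """Return the pending message and mark the slot empty, or None if empty."""
    flag, length = HEADER.unpack_from(buf, 0)
    if flag == 0:
        return None
    payload = bytes(buf[HEADER.size:HEADER.size + length])
    HEADER.pack_into(buf, 0, 0, 0)  # hand the slot back to the sender
    return payload


def demo():
    sender = shared_memory.SharedMemory(create=True, size=MAILBOX_SIZE)
    try:
        HEADER.pack_into(sender.buf, 0, 0, 0)  # start with an empty slot
        receiver = shared_memory.SharedMemory(name=sender.name)  # same block
        try:
            first = post(sender.buf, b"sensor frame 1")
            second = post(sender.buf, b"sensor frame 2")  # slot still full
            received = take(receiver.buf)
            drained = take(receiver.buf)                  # nothing left
        finally:
            receiver.close()
    finally:
        sender.close()
        sender.unlink()
    return first, second, received, drained


if __name__ == "__main__":
    print(demo())
```

The second `post` is refused because the receiver has not yet emptied the slot. That wait on a shared resource is exactly the kind of coupling that costs an SCSS design some latency.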

When we hook multicore processors (with their IPC mechanisms) to multiprocessing fabrics (with their IPC mechanisms), we get a set of stacked latency and determinism problems. We know this from Sequent's work on its Non-Uniform Memory Access-Quad (NUMA-Q) architecture. Sequent hooked together four independent homogeneous CPU chips with a cache-coherent memory block shared between them on a single card (a quad). Then they linked the quad cards, and their cache-coherent memory blocks, with a high-speed serial fabric. Everything works fine until it’s time to access some data that is not in the cache-coherent memory. Then the machine slows to a crawl as the data is retrieved, and all real-time behavior is lost.
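
The stacking effect can be sketched with a simple weighted-average model in Python. Each tier is a pair of (fraction of accesses, latency); the three tiers and every number below are hypothetical, chosen only to show the shape of the problem, and are not Sequent measurements. The function names are also illustrative.

```python
import math


def mean_access_ns(tiers):
    """Average access time over tiers given as (fraction, latency_ns) pairs."""
    total = sum(fraction for fraction, _ in tiers)
    if not math.isclose(total, 1.0):
        raise ValueError("access fractions must sum to 1")
    return sum(fraction * latency for fraction, latency in tiers)


def worst_case_ns(tiers):
    """Slowest tier that is ever used; real-time budgets must assume this."""
    return max(latency for fraction, latency in tiers if fraction > 0)


# Hypothetical stacked system: values are assumptions for illustration only.
TIERS = [
    (0.90, 10.0),    # data already in memory shared on the multicore chip
    (0.09, 100.0),   # data in the cache-coherent block on the local card
    (0.01, 2000.0),  # data fetched from another card over the fabric
]

if __name__ == "__main__":
    print(f"mean access:  {mean_access_ns(TIERS):.1f} ns")
    print(f"worst case:   {worst_case_ns(TIERS):.1f} ns")
```

With these made-up numbers, the 1 percent of accesses that cross the fabric account for more than half of the mean access time, and the worst case, which is what a real-time deadline has to budget for, is 200 times the best case.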

As described, we will be building the same NUMA-Q architectures when we use homogeneous multicore processors and multiprocessing fabrics on VXS or VPX. I am not a big fan of homogeneous multicore processors in embedded applications. The embedded market would benefit tremendously from heterogeneous multicore machines. There are VME-based multiprocessing systems already installed that use a generic CPU for the main application, a graphics processor for the display, a DSP for handling the streaming I/O and math, and a communications processor for handling the communications functions. Hopefully, we will see some heterogeneous multicore processors come to market, and that will tremendously increase the demand for VXS and VPX systems. In the meantime, we will use homogeneous multicore processors and multiprocessing fabric architectures and live with the aberrant behaviors.

The objective of a homogeneous multicore processor is to capitalize on any parallelism in the software, split out that code, and run those segments in parallel on different cores. As for finding parallelism in some chunk of embedded code and running it on a homogeneous multicore machine, forget it.
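
One standard way to see why the payoff is limited is Amdahl's law, which is not cited above but is the usual model for this argument: if only a fraction p of the run time can be split across n cores, the speedup is 1 / ((1 - p) + p / n). A minimal Python sketch follows; the function names and the example fractions are hypothetical.

```python
import math


def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Speedup on `cores` cores when only `parallel_fraction` can be split."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


def max_speedup(parallel_fraction: float) -> float:
    """Upper bound on speedup as the core count grows without limit."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if parallel_fraction == 1.0:
        return math.inf
    return 1.0 / (1.0 - parallel_fraction)


if __name__ == "__main__":
    for p in (0.2, 0.5, 0.9):  # hypothetical parallel fractions
        print(f"p = {p:.1f}: 4 cores -> {amdahl_speedup(p, 4):.2f}x, "
              f"limit -> {max_speedup(p):.2f}x")
```

If only 20 percent of the code can run in parallel, four cores yield a speedup of about 1.18, and no number of cores can push it past 1.25.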

For more information, contact Ray at [email protected].