What is Intel up to? First, they got out of the InfiniBand business back in 2002 and focused on PCI Express (www.zdnet.com/news/intel-exits-but-infiniband-intact/298696). Then, over the past few years, Intel bought five companies and business groups that have IP and experience in other fabric-based technologies: NetEffect in 2008 and Fulcrum (which made Ethernet switch chips) in 2011. In 2012, Intel bought QLogic’s InfiniBand group, Cray’s Interconnect Design Group, and Whamcloud.

What will they do with all this knowledge and experience in interconnects?

A peek at the Nehalem-EX and Sandy Bridge processors tells us what they have in mind. Look at the Xeon 7500 series (Nehalem-EX). Internally, you have eight CPU cores, each attached to a pair of counter-rotating rings. A ring is in effect a one-dimensional torus, a baby version of the torus fabrics used in big machines. Each chip has four serial memory channels. There are no front-side or back-side parallel buses on the chip, so all of the chip’s external connections are serial channels (www.anandtech.com/show/3648/xeon-7500-dell-r810/3). The cores run at more than 2 GHz each, and the links can provide up to 6.4 GT/s. Each of the CPU chips dissipates up to about 130 W (www.siliconmechanics.com/files/NehalemEXInfo.pdf).
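Why bother with counter-rotating rings instead of a single ring? A quick hop-count comparison makes the point. The sketch below assumes an idealized ring with one stop per core (8 stops). The real chip's ring may carry more stops than cores, so treat the numbers as illustrative only.

```python
def ring_hops(src: int, dst: int, n: int, bidirectional: bool = True) -> int:
    """Hops from stop src to stop dst on an idealized n-stop ring."""
    d = (dst - src) % n               # distance travelling in one direction
    # With counter-rotating rings, traffic can take whichever direction is shorter.
    return min(d, n - d) if bidirectional else d

def hop_stats(n: int, bidirectional: bool = True) -> tuple[int, float]:
    """Worst-case and average hops from one stop to every other stop.

    The ring is symmetric, so stop 0 is representative of all stops.
    """
    hops = [ring_hops(0, j, n, bidirectional) for j in range(1, n)]
    return max(hops), sum(hops) / len(hops)

worst, avg = hop_stats(8, bidirectional=False)
print(f"one ring:              worst {worst} hops, average {avg:.2f}")
worst, avg = hop_stats(8, bidirectional=True)
print(f"counter-rotating rings: worst {worst} hops, average {avg:.2f}")
```

On this idealized 8-stop ring, the second ring cuts the worst case from 7 hops to 4 and the average from 4 to about 2.3.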

Intel is aiming these processors at the cloud computing and data center markets. Today, we might have about 20 servers per cubic meter of data center volume. A four-chip Xeon 7500 series server can provide the same compute power in about 1/8 of the volume, which is roughly an eightfold gain in compute density. So, a data center that holds about 50,000 servers in 500,000 square feet today could hold the equivalent of hundreds of thousands of servers in that same space tomorrow. This level of compute density will be critical for cloud computing.
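The density argument is simple arithmetic. Here is a sketch using only the figures quoted above. It assumes that floor area and volume scale together (same ceiling height) and counts "server-equivalents" of today's compute.

```python
# Figures from the text; the 1/8 volume ratio is the article's estimate.
SERVERS_TODAY = 50_000          # servers in the example data center
FLOOR_AREA_SQFT = 500_000       # floor area of that data center
SERVERS_PER_M3_TODAY = 20       # today's rough packing density
VOLUME_RATIO = 1 / 8            # new server needs ~1/8 the volume for the same compute

def scaled(count: float, volume_ratio: float) -> int:
    """Server-equivalents that fit in the same space if each needs volume_ratio of the volume."""
    return round(count / volume_ratio)

print("density gain:", 1 / VOLUME_RATIO)
print("server-equivalents per m^3:", scaled(SERVERS_PER_M3_TODAY, VOLUME_RATIO))
print("server-equivalents in the same building:", scaled(SERVERS_TODAY, VOLUME_RATIO))
print("sq ft per server today:", FLOOR_AREA_SQFT / SERVERS_TODAY)
```

This gives 400,000 server-equivalents, consistent with "hundreds of thousands." The calculation ignores power and cooling, which would likely limit how much of that density a real facility could use.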

Most users have no idea what to do with all the extra cores on the new CPUs. So, expect Intel to use one core as an Ethernet controller (a protocol-stack thrashing machine) and another as a disk/SSD storage controller (another protocol-stack thrashing machine). That puts the functions of the Network Interface Card (NIC) and the Mass Storage Controller Card (MSCC) on the CPU die.
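To picture what "a core as a protocol-stack machine" means, here is a toy model of the division of labor. One dedicated worker does nothing but parse incoming frames and hand clean payloads to the application. The frame format (a 2-byte length header) and all names are hypothetical. A Python thread stands in for the dedicated core; it is not pinned to real hardware.

```python
import queue
import struct
import threading

# Hypothetical frame format for illustration: 2-byte big-endian length, then payload.
HEADER = struct.Struct("!H")
STOP = None  # sentinel that tells the protocol worker to shut down

def protocol_core(rx: queue.Queue, app: queue.Queue) -> None:
    """Stands in for a core dedicated to protocol processing."""
    while True:
        frame = rx.get()
        if frame is STOP:
            app.put(STOP)              # pass shutdown on to the application side
            return
        (length,) = HEADER.unpack_from(frame)
        payload = frame[HEADER.size:HEADER.size + length]
        if len(payload) == length:     # drop truncated frames
            app.put(payload)

def run(frames: list[bytes]) -> list[bytes]:
    rx: queue.Queue = queue.Queue()
    app: queue.Queue = queue.Queue()
    worker = threading.Thread(target=protocol_core, args=(rx, app))
    worker.start()
    for f in frames:
        rx.put(f)
    rx.put(STOP)
    worker.join()
    delivered = []
    while (item := app.get()) is not STOP:
        delivered.append(item)
    return delivered

frames = [HEADER.pack(5) + b"hello", HEADER.pack(3) + b"abc", HEADER.pack(9) + b"short"]
print(run(frames))
```

The application side never sees headers or malformed frames. On real hardware, that parsing is the work a NIC or storage controller does today.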

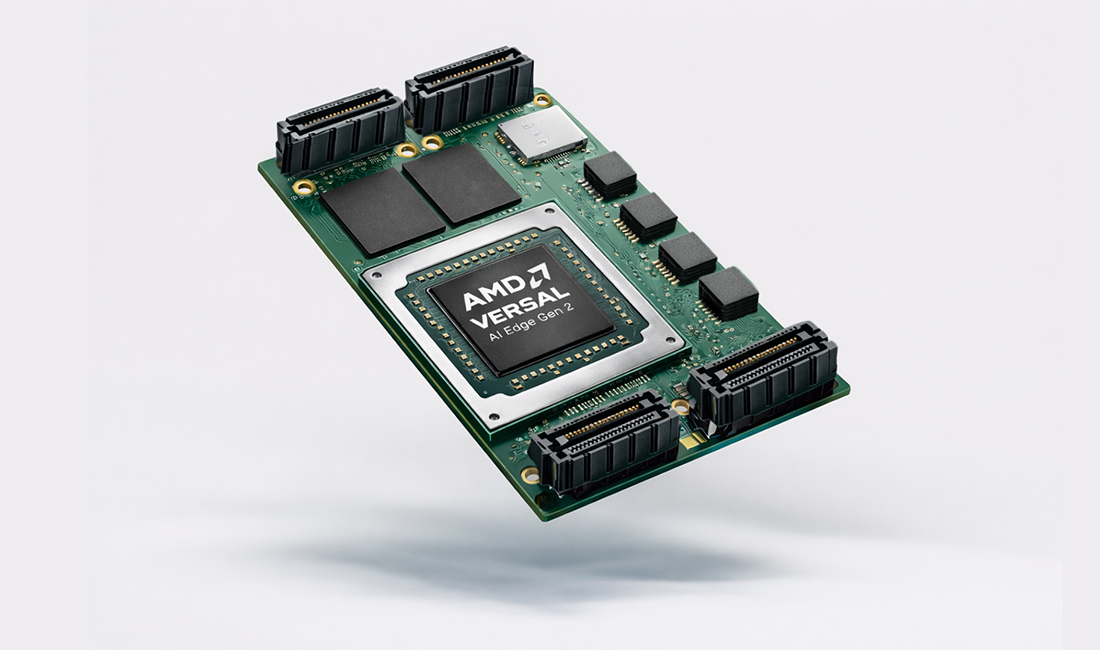

AMD is not ignorant of Intel’s plans. They bought SeaMicro in early 2012, and SeaMicro’s Freedom Fabric concept takes the same approach Intel is taking: all the connections to the cores are serial, and AMD is integrating the NIC and MSCC onto its CPU die too. ARM is looking at these massive server architecture concepts, but has not yet settled on its serial fabric channels.

It’s pretty clear that if you are making NICs and MSCCs, or mezzanine cards with Ethernet and SATA chips onboard, you are going to have big problems in the future. So far, we don’t know all the details of the electrical layers and protocol stacks on the Intel channels. These on-die fabrics are meant purely for chip-to-chip connections. Do they have enough drive current to push the differential signals through some big honking copper connector and down a backplane? Probably not, especially as channel frequencies rise, because copper losses grow with frequency. If you go off the motherboard with these serial channels, you gotta go optical.
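Why do rising frequencies hurt copper so much? A common first-order model splits loss per unit length into two parts: a skin-effect term that grows with √f and a dielectric term that grows with f. The sketch below assumes NRZ signaling, with the Nyquist frequency at half the transfer rate. The coefficients and the 20-inch run are hypothetical values picked only to show the trend. They are not Intel figures.

```python
import math

# Hypothetical coefficients for illustration only (not measured data).
K_SKIN = 0.25        # dB per inch per sqrt(GHz): skin-effect term
K_DIELECTRIC = 0.10  # dB per inch per GHz: dielectric-loss term
LENGTH_IN = 20.0     # hypothetical run: motherboard + connector + backplane

def loss_db(rate_gtps: float, length_in: float = LENGTH_IN) -> float:
    """First-order insertion loss at the Nyquist frequency of an NRZ link."""
    f_ghz = rate_gtps / 2  # NRZ: one bit per transfer, so Nyquist = rate / 2
    per_inch = K_SKIN * math.sqrt(f_ghz) + K_DIELECTRIC * f_ghz
    return per_inch * length_in

for rate in (6.4, 12.8, 25.6):
    print(f"{rate:5.1f} GT/s -> {loss_db(rate):5.1f} dB over {LENGTH_IN:.0f} in")
```

Each doubling of the transfer rate adds more loss than the previous one, because the term linear in f grows faster than the √f term. That growth eats into the drive margin of links designed for short chip-to-chip hops.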

We all remember the strange quirks and head-scratching weirdness of PCI and PCI Express when Intel gave us those. At the High Performance Embedded Computing (HPEC) level, you can bet that when Intel gives us these new CPUs with proprietary serial links and protocols, they will have technical bumps, occlusions, obstructions, Gordian knots, enigmas, race conditions, and slips all over them too.