From my reading lately, it looks like the CPU makers are starting their next flap over multicore concepts: homogeneous cores versus heterogeneous cores. In a homogeneous-core architecture, all the cores in the CPU are identical and share the same instruction set. Of course, this is the Intel approach to multicore. Intel won the CISC versus RISC battle because of the huge installed base of CISC-based code running on those cheap and slow PCs, and it is playing the same card again in this flap.

One myth about homogeneous-core processors is that you can run application code through a parallelizing compiler, find the segments that are independent, and run them on different cores simultaneously, which should speed up execution dramatically. Well, yes, it would in a perfect world. Gene Amdahl studied this problem for many years, and he argued that very little application code has parallelism that can actually be exploited. The result, Amdahl's Law, shows that the overall speedup is capped by the portion of the code that must still run serially, no matter how many cores you add. In my view, that makes chasing parallelism in application code largely a waste of time, so using parallelism to justify homogeneous-core architectures is another great waste of time.
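
Amdahl's Law is usually written as S(n) = 1 / ((1 - p) + p/n), where p is the fraction of the original run time that can be parallelized and n is the number of identical cores. The short Python sketch below is illustrative only: the function name `amdahl_speedup` and the sample fractions are assumptions, not measurements of any real workload. It shows how quickly the serial part caps the gain; with half the code parallelizable, no number of cores can get past 2x.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup predicted by Amdahl's Law.

    parallel_fraction: share of the original run time that can be spread
    across cores (0.0 to 1.0); the remainder must run serially.
    cores: number of identical cores.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


if __name__ == "__main__":
    # Hypothetical workloads: 50% and 90% of run time parallelizable.
    for p in (0.5, 0.9):
        row = ", ".join(
            f"{n} cores: {amdahl_speedup(p, n):.2f}x" for n in (2, 4, 8, 16)
        )
        # As cores grow without bound, speedup approaches 1 / (1 - p).
        print(f"p={p}: {row}; limit {1 / (1 - p):.1f}x")
```

Note that the cap comes entirely from the serial term (1 - p): doubling the core count only shrinks the part of the run time that was already parallel.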

For many decades, we have been building multiprocessor systems using homogeneous CPU chips, which is the same concept as the new homogeneous multicore CPUs. We found the knee in the curve early on: past that point, each additional processor adds less than its full share of performance. We have seen four-processor servers outperform six-processor servers hands down. The overhead of interprocessor communication and shared resources subjects such machines to the Law of Diminishing Returns beyond about four CPUs, particularly when they rely on parallel buses, shared memory, and cache-coherency protocols.
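
One way to model both the knee in the curve and the case where four processors beat six is Neil Gunther's Universal Scalability Law. It is not part of the original discussion; it extends Amdahl's Law with a coherency term: C(n) = n / (1 + α(n - 1) + βn(n - 1)). Here α stands for contention on shared resources such as the bus and memory, and β for the cost of keeping caches coherent. The coefficients in this minimal sketch are hypothetical, picked so that throughput peaks at four processors; they are not fitted to any real server.

```python
def usl_capacity(n: int, alpha: float, beta: float) -> float:
    """Throughput of n processors relative to one, per the Universal Scalability Law.

    alpha: contention for shared resources (bus, memory), 0 <= alpha < 1.
    beta: coherency cost (e.g. cache-coherency traffic), beta >= 0.
    """
    return n / (1 + alpha * (n - 1) + beta * n * (n - 1))


def peak_processors(alpha: float, beta: float) -> float:
    """Processor count at which USL throughput peaks (requires beta > 0)."""
    return ((1 - alpha) / beta) ** 0.5


if __name__ == "__main__":
    # Hypothetical coefficients chosen so the peak lands at four processors.
    alpha, beta = 0.04, 0.06
    for n in range(1, 9):
        print(f"{n} CPUs: {usl_capacity(n, alpha, beta):.2f}x")
    print(f"peak at about {peak_processors(alpha, beta):.1f} CPUs")
```

With these made-up coefficients, four CPUs deliver about 2.17x and six CPUs only 2.0x. The β term grows with the square of the processor count, which is what turns diminishing returns into negative returns.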

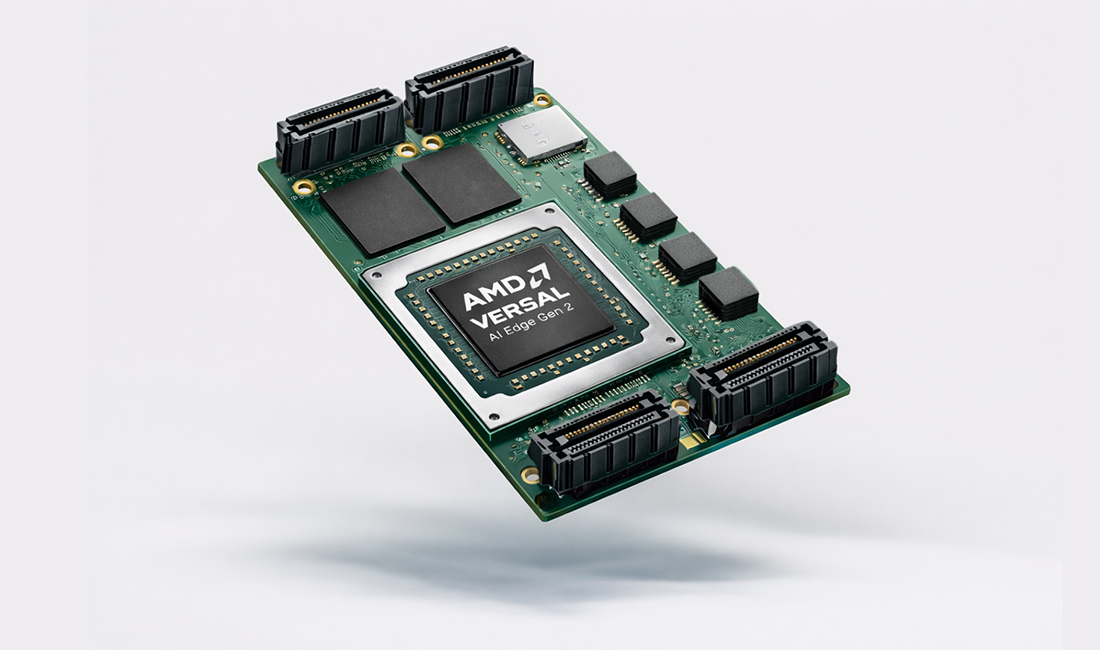

A heterogeneous multicore architecture would consist of highly specialized Application Specific Processor cores (ASPs), each with its own instruction set. Consider this: The primary core would be a general-purpose processor for running basic programs. Next, a graphics core would handle all graphics and display algorithms. A Protocol Offload Engine (POE), a communications processor core, would handle all communications tasks. You could also add a math-oriented core (such as a DSP core) to handle the heavy calculations that some application programs require. Now you have an architecture that can show serious performance gains over homogeneous-core designs without all the voodoo parallelism promises.
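
To make that division of labor concrete, here is a toy Python sketch of how work might be routed on such a chip. The core names, the workload labels, the `ROUTES` table, and the `dispatch` function are all hypothetical; the sketch only illustrates the idea of sending each kind of work to the core built for it, with everything else (or anything whose specialized core is missing) falling back to the general-purpose core.

```python
from enum import Enum


class Core(Enum):
    """Hypothetical core types on a heterogeneous multicore chip."""
    GENERIC = "general-purpose core"
    GRAPHICS = "graphics core"
    PROTOCOL_OFFLOAD = "protocol offload engine"
    DSP = "DSP/math core"


# Hypothetical routing table: workload kind -> preferred specialized core.
ROUTES = {
    "render": Core.GRAPHICS,
    "display": Core.GRAPHICS,
    "network": Core.PROTOCOL_OFFLOAD,
    "filter": Core.DSP,
    "fft": Core.DSP,
}


def dispatch(workload_kind: str, available: set) -> Core:
    """Pick a core for a workload, falling back to the generic core."""
    preferred = ROUTES.get(workload_kind, Core.GENERIC)
    return preferred if preferred in available else Core.GENERIC


if __name__ == "__main__":
    # A hypothetical part with no protocol offload engine.
    chip = {Core.GENERIC, Core.GRAPHICS, Core.DSP}
    for kind in ("render", "network", "fft", "spreadsheet"):
        print(f"{kind:>12} -> {dispatch(kind, chip).value}")
```

Here "network" lands on the generic core because this imaginary chip lacks an offload engine, which is exactly the slower path a homogeneous design would take for every kind of work.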

Yes, you can write all that graphics, communications, and math code and run it on homogeneous cores. But that code and those cores will show terrible performance compared with a heterogeneous group of ASPs. Even so, I expect Intel to hold its position on homogeneous multicores and either write that code itself or get Microsoft to write it.

The companies supporting heterogeneous multicore concepts are AMD and IBM, with more joining the party every day. AMD bought ATI (a graphics chip company) a year or so ago, so AMD already has a graphics core. AMD has also licensed that ATI graphics core to Freescale Semiconductor and will probably license it to more companies. AMD and IBM also have packet-thrashing cores, such as the protocol and communications offload engines in their Ethernet and other communications chips. DSP cores for math-oriented applications are available from many sources, so putting together a very efficient and cost-effective heterogeneous multicore processor is relatively easy. The heterogeneous approach also lets the CPU makers choose best-in-class cores and software for each function, rather than shoehorning slow, kludgy code into a clumsy, inelegant solution on a homogeneous multicore chip. I also suspect that heterogeneous multicore chips offer substantial savings in power consumption and heat dissipation.

It is clear to me that the heterogeneous multicore concept makes a lot of sense. But, as in the RISC versus CISC battles of the '80s, my opinion ignores the factor that decided that fight: all that kludgy CISC code running on the poorly performing PCs available today. To compete with AMD's heterogeneous core model, Intel would have to buy NVIDIA for big bucks. Or it could take the cheap route and just write some graphics-thrashing code for one of its x86 cores and pawn it off on the market.

This homogeneous versus heterogeneous multicore flap will continue for a while and will surely be interesting to watch. I think if you examine the homogeneous multicore model closely, you will discover a lot of shortcomings.