In recent years, a new class of advanced static analysis tools has emerged and proven effective at finding serious programming flaws in large software systems. As a result, these tools are beginning to be used widely in C and C++ development for embedded and safety-critical applications. These tools can find defects without executing the code, so unlike traditional testing, they do not require test cases to be written. They can also explore more combinations of circumstances than conventional testing can cover, so they can find flaws that are difficult to detect otherwise.

However, all static analysis tools are prone to both false positives (warnings that do not indicate real flaws) and false negatives (bugs that are not found). These cannot be avoided with the technology that is feasible today. What distinguishes this new class of tools from the previous generation is a much lower false-positive rate and the ability to find much more serious bugs. The risk of false positives means that all warnings should be inspected by a human familiar with the code to determine whether they constitute a real problem, so it is important to keep the rate low. One source of both false positives and false negatives is that static analysis tools operate on an abstraction of the program that might leave out concrete details such as those implied by the target architecture. Thus, static analysis tools that address and minimize this problem are vital, as is insight into how these tools operate.

How static analysis tools work

Advanced static analysis tools have their roots in theoretical computer science techniques such as model checking and abstract interpretation. This makes them very different from traditional static analysis tools such as lint. Lint-like tools can find some classes of superficial syntactic flaws and stylistic discrepancies, such as the use of old-style declarations or occurrences of the goto statement, but they can be painful to configure for effective use. Significant effort is required to integrate them with build systems that do not already use them. They also generate a large proportion of false positives, often well in excess of 90 percent, and examining and suppressing these is tedious and time consuming.

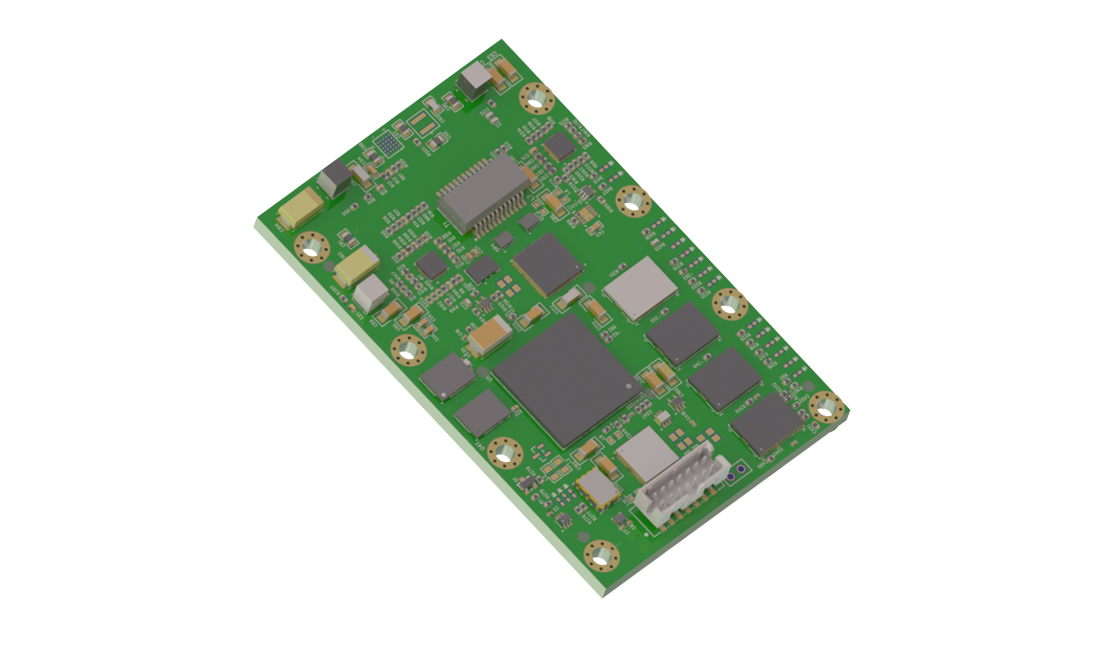

In contrast, these new advanced static analysis tools have been designed to integrate easily, usually without requiring any changes to the build system; they also produce actionable reports with a relatively low level of false positives (10-30 percent is typical). Figure 1 depicts the architecture of one such tool, showing that it integrates closely with the build system by observing how it operates.

These advanced static analysis tools operate by examining the source code of the program in detail. They create a model of the code, and then do an abstract execution of that model. As this pretend execution proceeds, the abstract state of the program is examined. If anomalies are detected, they are reported as warnings.

There are two classes of defects that these tools can detect by default. The first class is where the fundamental rules of the language are being broken, such as buffer overruns, null dereferences, and others. The second class is where the program breaks rules for using standard APIs. Errors in this class include resource leaks and file system mismanagement (double close, race condition, and so on). Good tools also allow users to extend the analysis by writing checkers that find violations of their own set of programming rules.

Figure 2 shows a screenshot of an instance caught by static analysis where a buffer overrun was detected in an application. In this case, the programmer did not allocate enough memory for the newloc buffer because of an accidentally misplaced parenthesis on line 2200. These tools additionally allow users to get a high-level summary of the quality of the program, as shown in Figure 3. This chart shows a summary of which classes of defects were found in which source files.

Impact on target architecture

To produce accurate results, static analysis tools must account for a phenomenon called the What You See Is Not What You eXecute (WYSINWYX) effect. Specific aspects of the target architecture must also be considered because they affect the program model. If the tool has an imprecise model of the code, then the results it produces will also be imprecise. It might fail to find some flaws, or it might report flaws where none exist. An accurate model yields much better results.

The WYSINWYX effect

The WYSINWYX effect refers to the observation that source-code representations are ambiguous, and that there are properties of compiled code that are entirely invisible in the source code. It is only when the source is compiled or analyzed that these ambiguities are resolved and other implications become apparent. There are several different causes of this effect:

- Memory layout. The layout of variables in storage is usually not specified in source code, so the choice is left entirely up to the compiler. Some variables may be placed in memory, and others may be placed in registers. The order in which they appear in memory may be different than the order in which they are declared in the source code. A compiler may choose to pad data structures with unused bytes.

- Order of execution. In C, the order of evaluation is in many cases unspecified, even for seemingly simple expressions. For example, in the expression f()+g(), the order in which those functions are called is chosen by the compiler. The same expression in a different context may be evaluated in a different order, and, worse still, different compiler optimization levels may yield different orders. This only matters when the functions have side effects, such as changing the values of global variables, but compilers cannot in general determine whether that is the case.

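- Compiler bugs and optimizations. Compilers may introduce unwanted effects, either because they have flaws of their own or because a legitimate optimization removes behavior the programmer was relying on. Consider the following example, which occurred in a login program: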

memset(password,'\0',len);

free(password);

As one can observe, the password variable was a dynamically allocated buffer being used to store sensitive data, and the intent of the programmer was sound: to limit the lifetime of this sensitive information by scrubbing it before returning it to the heap. However, the compiler optimizer noted that the contents of password were not being used after the call to memset, so it considered that call to be useless and then removed it entirely. The result was that the actual effect was the opposite of the intent: The sensitive data was returned to the heap unchanged.

Target architecture components

Of these causes of the WYSINWYX effect, the first – the choice of memory layout – is strongly related to the target architecture. Target architectures vary widely, and as there is no single widely accepted standard, they can all differ in the following respects:

- Sizes of primitive types. Not all architectures agree on the sizes of primitive types such as int. On some architectures an int is four bytes, on others only two bytes.

- Alignment. Different architectures have different constraints on how types should be aligned. For example, on an x86 architecture, a double may be aligned on a four-byte boundary, but on SPARC, it must be eight-byte aligned.

- Signedness. The C language has several types where signedness is implicit. The primitive char type, bit fields in structure types, and enumeration types are good examples. When the signedness is not specified explicitly in the source code, the compiler uses the default, which may be different on different architectures. This affects what happens when the type is converted into another type using a cast, or when the raw bit pattern is used.

- Bit fields. The treatment of bit fields is under-specified in the C language definition, so different compilers make different choices. One aspect of this is the position of bit fields within the structure and the padding used around them. Some compilers have options or pragmas to control this behavior.

Additionally, one particularly unpleasant defect that shows up in many C programs is the buffer overrun. To find these, a static analysis tool must accurately know the sizes of buffers. This is especially difficult for dynamically allocated buffers, as their actual sizes are not necessarily known until runtime. Due to the extensive use of casting in many C programs, it can be tricky for a static analysis tool to keep track of the actual size of a buffer. Often the actual size is different from the declared size. The following idiomatic code snippet is a good example.

struct packet {

int len;

char data[1];

};

p = (struct packet *)malloc(sizeof(struct packet)+X);

memset(&p->data[0], '\0', X);

In this example, the user wants a structure representing a data packet of variable size, with X indicating the number of bytes in the payload. The declared length of the data field is only one byte, but the allocation means that the actual amount of space available for the payload is larger. Assuming that an int is four bytes, and that three bytes of padding are introduced after the data field to make the size of the structure a multiple of four, the size of struct packet will be eight and the size allocated to p will be X+8. The data field starts at offset four, so there are X+4 bytes available to be written to. Thus, the call to memset does not constitute a buffer overrun, even though a naïve analysis of the types might indicate so.

This example illustrates the importance of taking into account the implications of the target architecture, as both the sizes of primitive types and the alignment of structures affect the actual amount of space available in the buffer.

Recommendations

Today’s advanced static analysis tools are effective at finding serious programming flaws, and their use should be considered a best practice for safety-critical software development. They are most useful when they are able to model the program being analyzed as accurately as possible. This means they should take into account aspects of the target architecture such as memory layout in order to counter the WYSINWYX effect. The best tools do this by understanding how the compiler and the architecture work, and by selecting the right behavior automatically without requiring additional user input. In unusual cases where this is not possible, advanced static analysis tools, such as GrammaTech’s CodeSonar, also allow users to fine-tune these properties for themselves. This way, they can find subtle programming flaws that may otherwise evade detection.

GrammaTech, Inc.

607-273-7340

www.grammatech.com