A quiet revolution has been emerging within military embedded computing.

The trend toward Ethernet as the basic communications channel began about a decade ago, and Ethernet is now close to universal in even the most hardened military environments. In those early days, 10 Mbps and 100 Mbps Ethernet were common, and the move to 1 Gbps (1 GbE) was relatively slow. The recent move from 1 GbE to 10 GbE, however, has been very rapid by comparison.

This change in the “need for speed” is, of course, driven by the applications in this market niche. A major driver is the very recent trend of placing video input devices at many strategic points on a broad range of manned and unmanned land-, sea-, and air-based military vehicles to afford a view of the surroundings. This video view of the world is then passed on to many different operators, including those involved with navigation, course plotting, driving, and even weapons control.

Other trends are pushing the military market into faster networks as well. Many of the “traditional” military applications using sensor arrays, radar, sonar, or other technologies were designed around relatively low data throughput and are now seeing their data rate requirements increase. Recent trends with 300 MHz radar phased arrays with 32-bit wide data are well beyond the limits of current installed network technologies.
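As a rough illustration of why such arrays strain installed networks, the sketch below assumes that "300 MHz" means 300 million samples per second and that each sample is a single 32-bit word per channel. Both readings are assumptions, since the figures above do not spell this out, and the function name is hypothetical.

```python
def raw_rate_gbps(samples_per_sec: float, bits_per_sample: int, channels: int = 1) -> float:
    """Raw data rate in Gbps, ignoring compression and protocol overhead."""
    return samples_per_sec * bits_per_sample * channels / 1e9


# Assumed interpretation: 300 Msamples/s, 32 bits per sample, one channel.
rate = raw_rate_gbps(300e6, 32)

print(f"{rate:.1f} Gbps per channel")    # 9.6 Gbps per channel
print("fits in 1 GbE: ", rate <= 1.0)    # False
print("fits in 10 GbE:", rate <= 10.0)   # True, but with little headroom
```

Under these assumptions a single channel nearly fills a 10 GbE link on its own, before any framing overhead is counted.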

This trend to video everywhere – accelerated by Graphics Processing Units (GPUs) – means that there needs to be much more “raw” data capability within the system, and 10 GbE is well positioned to handle that. Of course, moving video streams around is only the first part of the answer. The occupants of a tank need to have 360-degree visibility of the outside environment, and preferably with night vision capability. So the next step is to turn that video stream into real intelligence. To accomplish this, the data stream must be managed to highlight movement, spot telltale patterns, track objects, and perform image enhancements. Thus, the requirement is twofold: to provide the processing power capable of acquiring and interpreting data – and to move that information as quickly as possible to its destination.
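To make "highlight movement" concrete, here is a minimal sketch of frame differencing, one of the simplest ways to flag change between two frames. It is a CPU-side, pure-Python illustration of the kind of per-pixel, data-parallel work that maps well onto a GPU; the function names, the threshold, and the tiny test frames are all illustrative assumptions, not a description of any fielded system.

```python
def motion_mask(prev, curr, threshold=25):
    """Return a 0/1 mask marking pixels whose brightness changed by more than threshold."""
    return [
        [1 if abs(c - p) > threshold else 0 for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]


def bounding_box(mask):
    """Smallest (top, left, bottom, right) box around all changed pixels, or None."""
    hits = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not hits:
        return None
    rows = [r for r, _ in hits]
    cols = [c for _, c in hits]
    return min(rows), min(cols), max(rows), max(cols)


# Two tiny 4x6 grayscale frames: a uniform background, then two pixels brighten.
prev_frame = [[10] * 6 for _ in range(4)]
curr_frame = [row[:] for row in prev_frame]
curr_frame[1][2] = 200
curr_frame[2][3] = 180

mask = motion_mask(prev_frame, curr_frame)
print(bounding_box(mask))  # (1, 2, 2, 3)
```

Each output pixel depends only on the corresponding input pixels, which is why this class of operation parallelizes so readily.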

GPGPU: An intelligence game changer

The use of GPUs has rapidly moved from the gaming market to the C4I market. The GPU’s ability to perform massively parallel processing makes it well suited for extracting useful information from video streams. Now that General Purpose GPU (GPGPU) methodologies (including CUDA and OpenCL) are becoming mainstream, the ability to process visual information into meaningful intelligence – and to communicate it via a high-speed network protocol such as Ethernet – is taking a major step forward. The ability to combine GPGPUs with high-end, mainstream CPUs opens up the way for many practical solutions in the battlefield arena.

It is relatively easy to imagine video streams coming from dozens of cameras going to monitors for human operators as well as to multiple processing clusters. In each cluster, GPGPUs would process the streams, patching together visual images, extracting clues, and linking with CPUs to feed into the combined intelligence system. After analysis, the next step might be to remix the streams, superimposing the extracted information on the captured raw video.

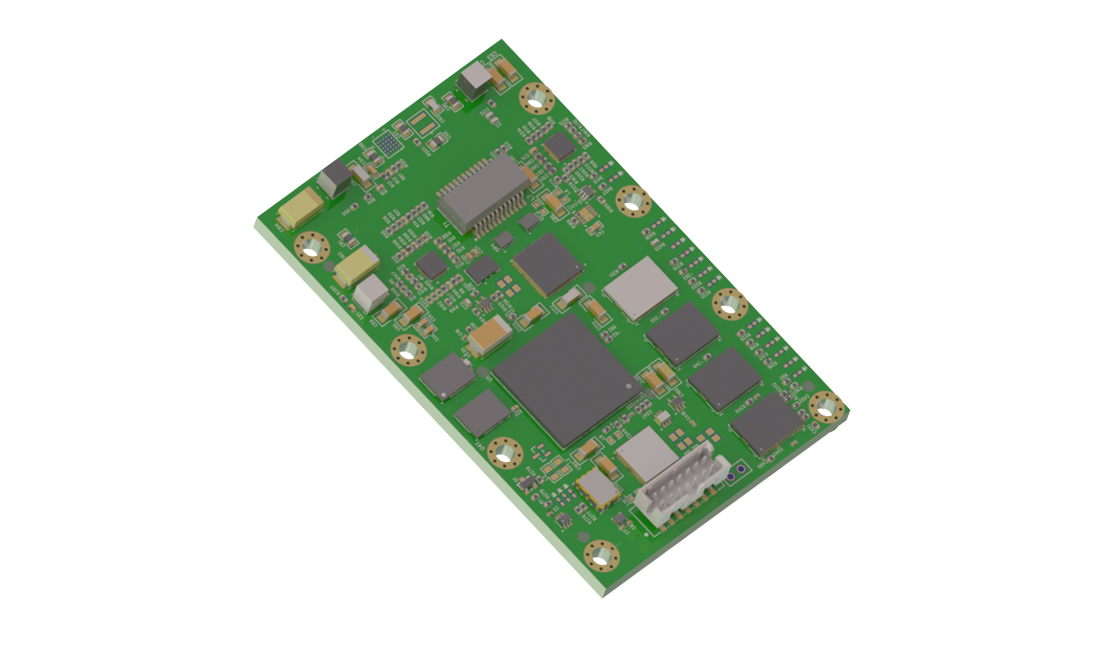

There are even cases where the visual stream needs to be heavily analyzed within an enclosed system, with only a tiny portion of it ever being passed to an operator. A good example of this is a UAV that uses video information for its fine-grained navigation (Figure 1). Here, the video stream is passed to a series of GPGPU/CPU combinations performing tasks that range from geographic recognition to flight control. If the visual information is of sufficient quality, image processing techniques and GPS information can allow for passive flying with no need for ground radar.

Ethernet, with its ability to broadcast, multicast, or segment into virtual LANs, provides a good backbone for military video data traffic. It also allows for bringing together mismatched data rates, with some devices running at Kbps speeds and others at Mbps. This flexibility allows Ethernet to be used as the one common medium to carry all data. In some cases, critical and non-critical data can be segregated through the use of VLANs or Quality of Service methods. Of course, introducing video into the total data stream creates a traffic load in the multi-Gbps range, comprising raw video streams, tracking information, and normal command and control data. This is exactly the scenario for the introduction of 10 GbE. When we also consider the complexity of the types of data distributed, we see the need to configure and control this data using 10 GbE switches.
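As a hedged sketch of how an end device might take part in this, the following uses the standard Python `socket` module to prepare a UDP multicast sender whose packets carry a DSCP marking that QoS-aware switches can act on. The group address, port, and DSCP class choices are hypothetical values picked for illustration, and `IP_TOS` is assumed to be available (as it is on Linux). VLAN membership is normally configured on the switch or interface and is not shown.

```python
import socket

# Hypothetical values for illustration only.
VIDEO_GROUP = "239.1.1.1"   # administratively scoped multicast address
VIDEO_PORT = 5004
DSCP_VIDEO = 34             # AF41, a class often used for video
DSCP_CONTROL = 46           # EF, a class often used for latency-critical traffic


def dscp_to_tos(dscp: int) -> int:
    """Place a 6-bit DSCP value in the upper six bits of the IP TOS byte."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be in the range 0..63")
    return dscp << 2


def make_multicast_sender(dscp: int, ttl: int = 1) -> socket.socket:
    """Create a UDP socket whose outgoing multicast packets carry the given DSCP."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    # TTL 1 keeps multicast traffic on the local network segment.
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_TTL, ttl)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, dscp_to_tos(dscp))
    return sock


def send_chunk(sock: socket.socket, payload: bytes) -> int:
    """Send one datagram to every receiver that has joined the video group."""
    return sock.sendto(payload, (VIDEO_GROUP, VIDEO_PORT))


print(dscp_to_tos(DSCP_VIDEO), dscp_to_tos(DSCP_CONTROL))  # 136 184
```

One multicast stream can serve several monitors and processing clusters at once, while the marking gives switches a basis for treating video and command traffic differently.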

We can do a few simple sums to see the impact of video on data rates. Let’s assume we’re talking about a data rate of 200 Mbps per camera (say, for 10-bit color, standard definition, and 25 frames/sec). Twelve of these cameras on a vehicle represent 2.4 Gbps of inbound data stream. Maybe a year or so ago, the way to handle this was to “squeeze” it into 1 GbE networks. We could get away with 1 GbE, provided the setup was quite simple, with one camera streaming to one monitor. Compression techniques such as H.264 could also be applied, and that gave us some headroom to cope on 1 GbE networks. But we are already pushing the limits of this networking technology. Moreover, there are applications in which compression might not be acceptable.
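These sums can be reproduced in a few lines. The sketch below assumes a 720 x 576 frame and 4:2:2 chroma subsampling at 10 bits per component (20 bits per pixel on average) as one plausible reading of "10-bit color, standard definition, and 25 frames/sec"; those specifics are assumptions, chosen because they land close to the 200 Mbps figure used here.

```python
import math


def raw_video_mbps(width: int, height: int, bits_per_pixel: int, fps: int) -> float:
    """Uncompressed video rate in Mbps for the active picture area only."""
    return width * height * bits_per_pixel * fps / 1e6


# Assumed format: 720x576, 20 bits/pixel (10-bit 4:2:2), 25 frames/sec.
per_camera = raw_video_mbps(720, 576, 20, 25)
print(f"{per_camera:.2f} Mbps per camera")        # 207.36 Mbps per camera

# Using the rounded 200 Mbps figure for twelve cameras.
cameras = 12
total_gbps = cameras * 200 / 1000
print(f"{total_gbps} Gbps aggregate")             # 2.4 Gbps aggregate

# Number of fully loaded 1 GbE links needed to carry that uncompressed.
print(math.ceil(total_gbps / 1.0), "x 1 GbE")     # 3 x 1 GbE
```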

The time has come for 10 GbE

Therefore, 10 GbE is the next logical step in this progression. A 10 GbE network can be considered as being able to handle 50 camera feeds, in terms of raw bit rates: 200 Mbps * 50 = 10 Gbps. Obviously the calculations for any network planning are a lot more complex than this, but the tenfold increase in speed is very important.
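A slightly more cautious version of this sum reserves part of the link for protocol overhead and other traffic. The helper below is a sketch; the 80 percent usable figure is an arbitrary planning assumption for illustration, not a measured or recommended value.

```python
def max_feeds(link_mbps: int, per_feed_mbps: int, usable_percent: int = 100) -> int:
    """Whole number of feeds that fit in the usable share of a link (integer math)."""
    if not 0 < usable_percent <= 100:
        raise ValueError("usable_percent must be in the range 1..100")
    return (link_mbps * usable_percent) // (100 * per_feed_mbps)


print(max_feeds(10_000, 200))        # 50 feeds at the raw 10 Gbps bit rate
print(max_feeds(10_000, 200, 80))    # 40 feeds if only 80% is assumed usable
print(max_feeds(1_000, 200))         # 5 feeds on 1 GbE, for comparison
```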

As such, expect to see military vehicles that are “all-seeing,” with many video feeds distributing this video information to operators and to highly sophisticated video analysis systems based on combinations of GPGPUs and high-powered CPUs. These will, in turn, supplement the video with “heads up” information of different types to various operators, with all of this made possible by 10 GbE networks throughout the vehicle.

The physical topology of this sort of system differs widely depending on the type of vehicle. In the simplest case – such as a lightweight UAV, perhaps – we can imagine all video cameras feeding into one central box, where all the GPGPUs and CPUs communicate across a high-speed backplane that itself might be designed around a 10 GbE switch. In other cases, the video processing might be physically separated from the command and control systems. Groups of cameras can feed small switches that aggregate the 1 GbE feeds into 10 GbE cables (possibly using redundant links) to a series of GPGPU/CPU systems, each supporting a specific functionality. Having 10 GbE available in backplane, copper, or fiber cabling would allow the computing solution to be tailored to the physical conditions required by the vehicle.
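The aggregated arrangement can be modeled in a few lines to check how loaded each 10 GbE uplink would be. This is a sketch only: the `EdgeSwitch` class, the switch names, and the split of twelve 200 Mbps cameras into two groups of six are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class EdgeSwitch:
    """A small switch aggregating 1 GbE camera feeds onto one 10 GbE uplink."""
    name: str
    camera_mbps: list = field(default_factory=list)  # one entry per camera feed
    uplink_mbps: int = 10_000

    def offered_load(self) -> int:
        """Total traffic the cameras push toward the uplink, in Mbps."""
        return sum(self.camera_mbps)

    def uplink_utilization(self) -> float:
        """Fraction of the uplink consumed by the camera feeds."""
        return self.offered_load() / self.uplink_mbps

    def feeds_fit_access_ports(self, port_mbps: int = 1_000) -> bool:
        """True if every camera feed fits within its own 1 GbE access port."""
        return all(rate <= port_mbps for rate in self.camera_mbps)


# Hypothetical layout: twelve 200 Mbps cameras split across two edge switches.
switches = [EdgeSwitch("front", [200] * 6), EdgeSwitch("rear", [200] * 6)]

for sw in switches:
    print(sw.name, sw.offered_load(), "Mbps,",
          f"{sw.uplink_utilization():.0%} of uplink")
# front 1200 Mbps, 12% of uplink
# rear 1200 Mbps, 12% of uplink
```

With redundant links, each uplink would need enough spare capacity to carry the full load of its group on its own after a failure, which this layout leaves ample room for.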

Of course, all of this needs to meet the usual military levels of ruggedization. 10 GbE technology is only now reaching the level of acceptance where it is possible to consider putting it into these harsh environments. And 10 GbE usage in rugged environments is emphasized by recent developments in the VITA standards arena. OpenVPX provides a number of options for using 10 GbE across the VPX backplane. A number of profiles are supported, with 10 GbE or a combination of 10 GbE and 1 GbE in a “data plane” and “control plane” split (terminology creeping in from the telco world). GE’s 24-port 10 GbE GBX460 switch (Figure 2) supports two of these OpenVPX profiles, with a typical application being the connection of GPGPUs and high-powered conventional processors in CUDA clusters.

Beyond 10 GbE

There is already talk of military platforms going to HD-quality cameras or scaling up the number of cameras, and maybe even 3D analysis techniques. Let’s assume it’s not going to be too long before 40 GbE and 100 GbE networks are the norm in the military. In fact, it might be that the military market becomes as bandwidth-hungry as the telco market has become, given the proliferation of video as the primary consumer of network bandwidth.

John N. Thomson is Software Engineering Manager at GE Intelligent Platforms. He has been involved in network software for three decades and worked with a range of companies from Burroughs Machines to McDonnell Douglas, with roles ranging from standards development to technical marketing. He can be contacted at [email protected].

GE Intelligent Platforms

www.ge-ip.com